AirBnB Reviews Helpfulness Classifier

Overview

This project builds a classifier that predicts the helpfulness level of AirBnB reviews for listings. We fine-tuned the FacebookAI/roberta-base model for multi-class text classification. More technical details are available via the links below.

Key techniques: LLM fine-tuning, web scraping

Links:

Code on GitHub: lihuicham/airbnb-helpfulness-classifier

Fine-tuned model: lihuicham/airbnb-reviews-helpfulness-classifier-roberta-base

Implementation

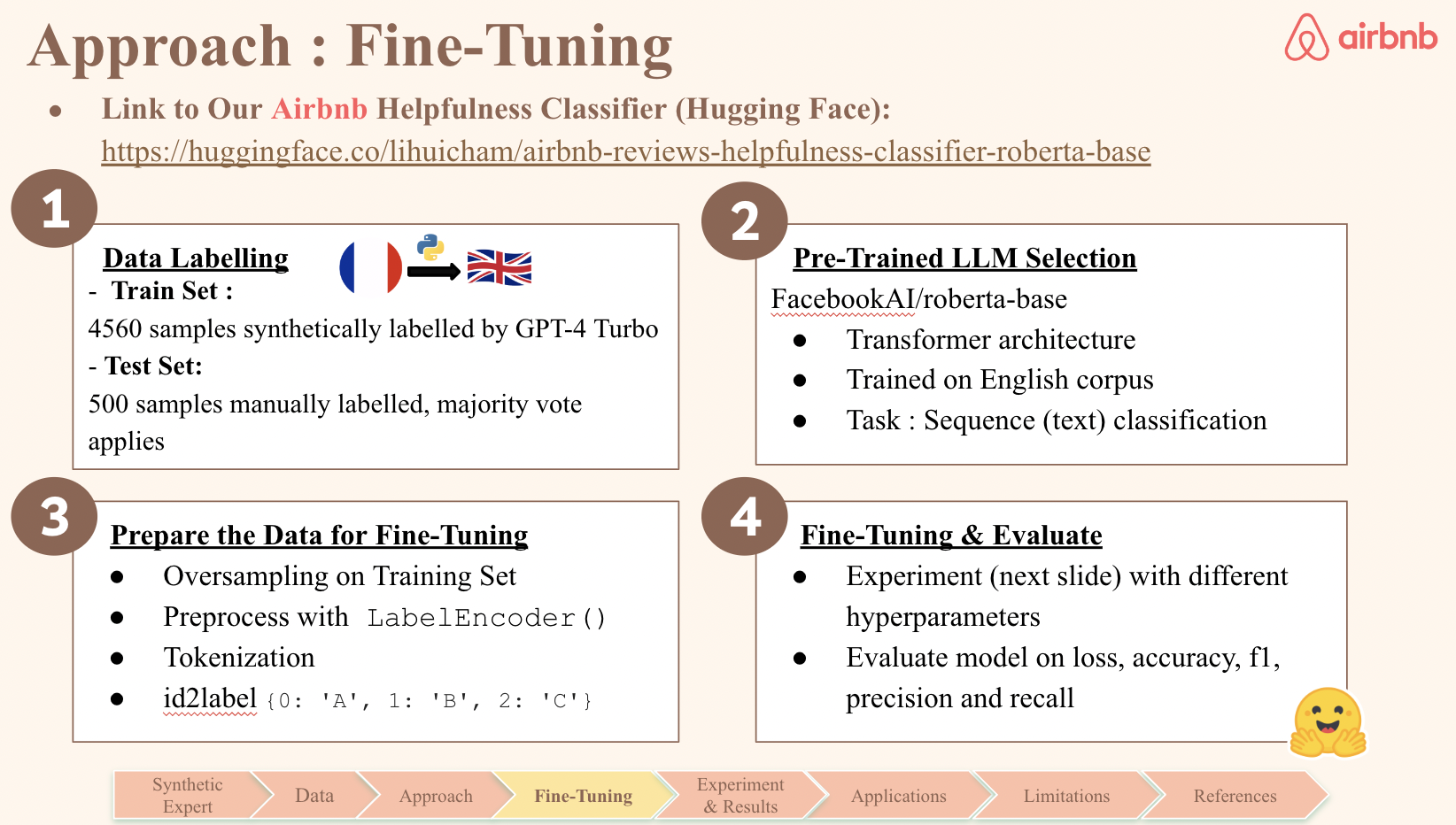

- Web Scraping. Scrape AirBnB listings for their reviews and translate them from French to English using Python.

- Data Labelling. The training dataset is synthetically labelled using GPT-4 Turbo via the OpenAI API, and the evaluation dataset is manually labelled by the team.

- Data Preprocessing. Oversampling to deal with the imbalanced dataset, followed by word tokenization.

- Fine-tuning. A pre-trained LLM, RoBERTa, is fine-tuned using the Transformers library from Hugging Face in PyTorch. The model is evaluated on accuracy, precision, recall and F1 metrics.
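The oversampling mentioned in the Data Preprocessing step can be illustrated with a minimal sketch. It assumes plain random oversampling (duplicating minority-class reviews until every class matches the largest one); the project does not state which oversampling method was used, and the function name `random_oversample`, the toy reviews, and the 0/1/2 labels are all hypothetical.

```python
import random
from collections import Counter, defaultdict


def random_oversample(texts, labels, seed=0):
    """Duplicate minority-class examples until all classes are equally frequent.

    Assumption: simple random oversampling with replacement. This is an
    illustration, not necessarily the method used in the project.
    """
    if not labels:
        return [], []

    rng = random.Random(seed)

    # Group the texts by their label.
    by_label = defaultdict(list)
    for text, label in zip(texts, labels):
        by_label[label].append(text)

    # Every class is grown to the size of the largest class.
    target = max(len(items) for items in by_label.values())

    pairs = []
    for label, items in by_label.items():
        extra = [rng.choice(items) for _ in range(target - len(items))]
        pairs.extend((text, label) for text in items + extra)

    # Shuffle so duplicated examples are not clustered together.
    rng.shuffle(pairs)
    return [t for t, _ in pairs], [l for _, l in pairs]


# Hypothetical toy data; labels 0/1/2 stand in for helpfulness levels.
texts = [
    "Great stay",
    "Nice",
    "Host was helpful and check-in was easy",
    "ok",
    "Clean",
    "Loved it",
]
labels = [0, 0, 2, 0, 1, 0]

balanced_texts, balanced_labels = random_oversample(texts, labels)
print(Counter(balanced_labels))
```

Oversampling of this kind is normally applied to the training split only, so that duplicated reviews do not leak into the evaluation set and inflate the reported metrics.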

hyperparameters = {'learning_rate': 3e-05,
                   'per_device_train_batch_size': 16,
                   'weight_decay': 1e-04,
                   'num_train_epochs': 4,
                   'warmup_steps': 500}
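To make the effect of `learning_rate` and `warmup_steps` concrete, the sketch below computes the learning rate at a given optimizer step. It assumes the default linear scheduler of the Hugging Face `Trainer` (linear warmup from 0 to the peak rate, then linear decay to 0), a single device, and no gradient accumulation; none of these are confirmed by the project. The training-set size of 8000 is purely hypothetical, and the helper names `total_training_steps` and `linear_schedule_lr` are illustrative.

```python
import math

hyperparameters = {
    "learning_rate": 3e-05,
    "per_device_train_batch_size": 16,
    "weight_decay": 1e-04,
    "num_train_epochs": 4,
    "warmup_steps": 500,
}


def total_training_steps(num_examples, batch_size, epochs):
    """Optimizer steps for a run on one device without gradient accumulation."""
    steps_per_epoch = math.ceil(num_examples / batch_size)
    return steps_per_epoch * epochs


def linear_schedule_lr(step, total_steps, peak_lr, warmup_steps):
    """Learning rate under linear warmup followed by linear decay to zero."""
    if step < warmup_steps:
        # Warmup phase: ramp up linearly from 0 to peak_lr.
        return peak_lr * step / max(1, warmup_steps)
    # Decay phase: ramp down linearly from peak_lr to 0 at total_steps.
    remaining = max(0, total_steps - step)
    return peak_lr * remaining / max(1, total_steps - warmup_steps)


# Hypothetical training-set size; the real size is not given here.
num_examples = 8000

total_steps = total_training_steps(
    num_examples,
    hyperparameters["per_device_train_batch_size"],
    hyperparameters["num_train_epochs"],
)

for step in (0, 250, 500, 1250, total_steps):
    lr = linear_schedule_lr(
        step,
        total_steps,
        hyperparameters["learning_rate"],
        hyperparameters["warmup_steps"],
    )
    print(f"step {step:>5}: lr = {lr:.2e}")
```

Under these assumptions the warmup covers a quarter of the run. With a smaller training set, 500 warmup steps would take up a larger share of training, which is worth checking when reusing these values.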

Main Challenges

- Formulating the problem so that data science skills could be applied to create a synthetic expert for a business use case.

- Researching various state-of-the-art LLMs to determine the most suitable pre-trained model for the project.

- Understanding the fine-tuning steps and implementing them in code, with reference to online resources.

- Limited computational resources, which constrained training time and cost.

My Contributions

- Team leader - set project timeline and deliverables for each team member.

- Main task was to complete the fine-tuning part of the project.

- Key presenter for our team’s approach and methodologies in class.

Tech Stack

Python, OpenAI API, PyTorch, Transformers

Outcome & Impact

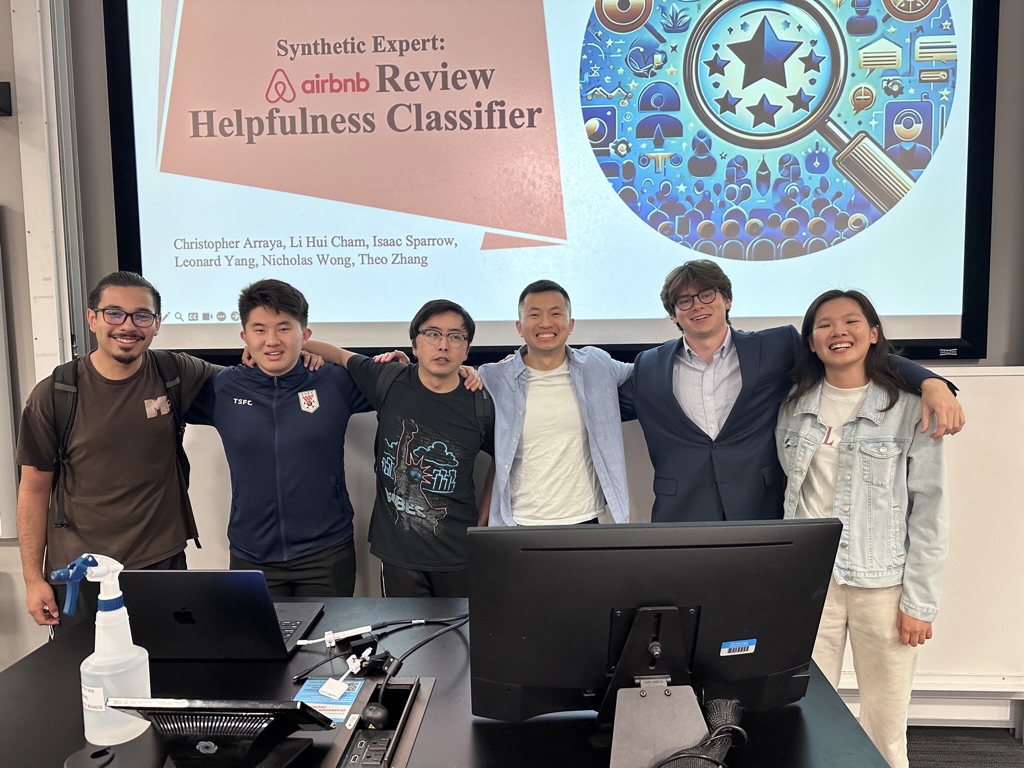

I really enjoyed the project, and it was an absolute highlight of my exchange journey at the University of North Carolina at Chapel Hill. All team members went above and beyond in collaborating on the project, and great friendships were made along the way. Personally, it was my first time fine-tuning an LLM, and even with past experience in building an end-to-end machine learning project, I still found this project a great learning opportunity.

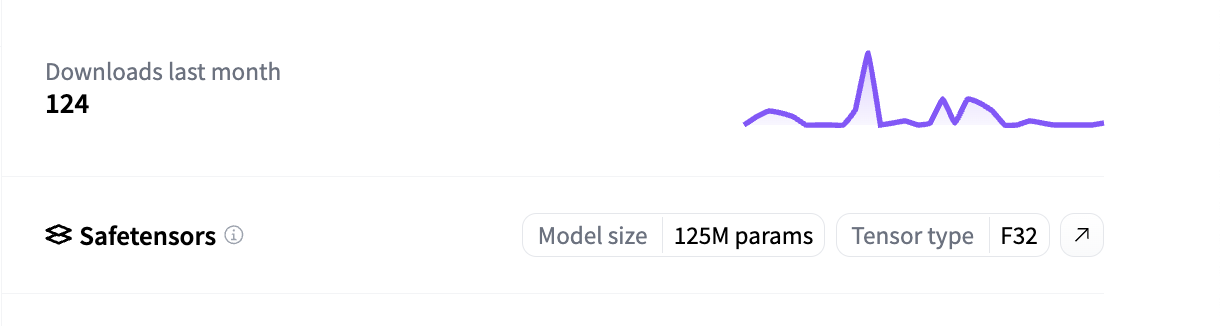

As of May 2024, the fine-tuned model had received over 100 downloads on Hugging Face! By comparison, most “reviews” models on HF have fewer than 30 downloads. Our AirBnB model also appeared in the trending list on the HF Models page a week after its release.
